In this post, let’s see how we can upload files that a client sends to an ASP.NET Web API service (as a multipart form post) directly to Amazon S3. The nice thing here is that we never save the files to local disk while the upload is happening: each file is buffered in memory and uploaded straight to Amazon S3.

Let’s go through an example. My client application is an AngularJS application. I am not going to cover how to consume ASP.NET Web API services from AngularJS here; some time back I wrote an article, AngularJS Consuming ASP.NET Web API RESTful Services, which you can refer to for more information on that.

I have modified the sample from the AngularJS Consuming ASP.NET Web API RESTful Services post to send a POST request to the ASP.NET Web API service. The request contains a form object that includes the file to upload.

Here is my view template. It has a file-select field that opens the file browser.

```html
<div ng-controller="homeController">
    <h1>{{message}}</h1>
    <label for="">Image</label>
    <input type="file" ng-file-select="onFileSelect($files)" accept="image/*">
</div>
```

In my AngularJS Controller, I have the following.

```javascript
'use strict';

app.controller('homeController', ['$scope', '$upload', function ($scope, $upload) {
    $scope.message = "Now viewing home!";

    $scope.fileUploadData = {
        UserName: "Jaliya Udagedara"
    };

    $scope.onFileSelect = function ($files) {
        for (var i = 0; i < $files.length; i++) {
            var file = $files[i];

            $scope.upload = $upload.upload({
                url: '../api/employees/upload',
                method: 'POST',
                data: { UserName: $scope.fileUploadData.UserName },
                file: file
            }).progress(function (evt) {
                console.log('percent: ' + parseInt(100.0 * evt.loaded / evt.total));
            }).success(function (data, status, headers, config) {
                console.log(data);
            }).error(function (data) {
                console.log(data);
            });
        }
    };
}]);
```

If you are not familiar with AngularJS, you can ignore the above part. Basically, what I am doing here is sending a POST request to my ASP.NET Web API service that contains the file to upload along with some additional data (the user name).
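For readers not using AngularJS, here is a minimal sketch of the same request in plain JavaScript, using the standard `FormData` and `fetch` APIs instead of the `$upload` service. The field name `UserName` mirrors what the controller reads; `buildUploadForm` and `uploadFile` are hypothetical helper names, and the URL is whatever your API route is.

```javascript
// Build a multipart/form-data body with one text field and one file part.
// buildUploadForm is a hypothetical helper, not part of any library.
function buildUploadForm(userName, file, fileName) {
  const form = new FormData();
  form.append('UserName', userName);   // read server-side as formData["UserName"]
  form.append('file', file, fileName); // carries a filename, so it is treated as a file
  return form;
}

// Send the form. The browser (or Node 18+) sets the multipart Content-Type
// header, including the boundary, automatically; do not set it by hand.
async function uploadFile(url, userName, file, fileName) {
  const response = await fetch(url, {
    method: 'POST',
    body: buildUploadForm(userName, file, fileName),
  });
  if (!response.ok) {
    throw new Error('Upload failed with status ' + response.status);
  }
  return response.json(); // the controller responds with { preSignedUrl: "..." }
}
```

The server-side code does not look at the file part's field name; it separates files from form fields by whether the part's Content-Disposition carries a filename, so the name `file` here is arbitrary.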

Now let’s move to my ASP.NET Web API project. There I have an API controller named “EmployeesController” with an action named “Upload”.

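```csharp
[HttpPost]
[Route("Employees/Upload")]
public async Task<HttpResponseMessage> Upload()
{
    // Reject requests that are not MIME multipart (e.g. multipart/form-data).
    if (!Request.Content.IsMimeMultipartContent())
    {
        return this.Request.CreateResponse(HttpStatusCode.UnsupportedMediaType);
    }

    StorageService storageService = new StorageService();

    // Read every body part into memory; nothing is written to local disk.
    InMemoryMultipartStreamProvider provider = await Request.Content.ReadAsMultipartAsync<InMemoryMultipartStreamProvider>(new InMemoryMultipartStreamProvider());

    // Ordinary form fields (parts without a file name).
    NameValueCollection formData = provider.FormData;
    string userName = formData["UserName"];

    // File parts (parts with a file name).
    IList<HttpContent> files = provider.Files;
    if (files.Count == 0)
    {
        return this.Request.CreateResponse(HttpStatusCode.BadRequest, "No file was uploaded.");
    }

    HttpContent file = files[0];
    Stream fileStream = await file.ReadAsStreamAsync();

    // The user name is used as the S3 object key.
    storageService.UploadFile("your bucketname", userName, fileStream);

    // Return a pre-signed URL that stays valid for 3000 seconds.
    string preSignedUrl = storageService.GeneratePreSignedURL("your bucketname", userName, 3000);
    return this.Request.CreateResponse(HttpStatusCode.OK, new { preSignedUrl });
}
```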

Here I first check whether the request’s content is MIME multipart content. If it is, I read all the body parts of the MIME multipart message into an InMemoryMultipartStreamProvider. InMemoryMultipartStreamProvider is my own implementation of the abstract class MultipartStreamProvider, in which I have overridden the GetStream method to simply return a MemoryStream. Its implementation is based entirely on MultipartFormDataStreamProvider and MultipartFileStreamProvider. MultipartFormDataStreamProvider has two constructors, one taking a root path and the other taking a root path and a buffer size, and if you look at its implementation, its GetStream method saves each uploaded file to local disk under that root path. In my implementation I have removed that part, as I don’t want the files to be saved locally.

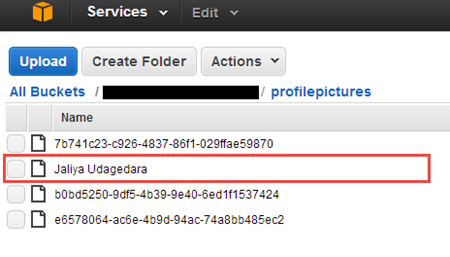
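The rule the provider uses to tell form fields from files is easy to miss in the C# code: GetStream records, for each body part, whether its Content-Disposition header has a filename, and ExecutePostProcessingAsync later uses that flag to route the part into FormData or Files. Here is a rough JavaScript sketch of the same decision. The function names are hypothetical, and the Content-Disposition parser is deliberately simplified (it assumes parameter values never contain semicolons, which real headers do not guarantee).

```javascript
// Strip one pair of surrounding double quotes, like UnquoteToken in the C# code.
function unquoteToken(token) {
  if (!token || !token.trim()) return token;
  if (token.length > 1 && token.startsWith('"') && token.endsWith('"')) {
    return token.slice(1, -1);
  }
  return token;
}

// Parse a Content-Disposition value into its type and parameters.
// Simplified: assumes no ';' inside parameter values.
function parseContentDisposition(value) {
  if (!value) {
    // Mirrors the InvalidOperationException thrown by GetStream.
    throw new Error("Did not find required 'Content-Disposition' header field in MIME multipart body part.");
  }
  const [type, ...params] = value.split(';').map(s => s.trim());
  const result = { type: type.toLowerCase(), params: {} };
  for (const param of params) {
    const eq = param.indexOf('=');
    if (eq === -1) continue;
    const key = param.slice(0, eq).trim().toLowerCase();
    result.params[key] = unquoteToken(param.slice(eq + 1).trim());
  }
  return result;
}

// A part with no filename parameter is a form field; otherwise it is a file.
function classifyPart(contentDisposition) {
  const { params } = parseContentDisposition(contentDisposition);
  const name = params.name ?? '';
  if (params.filename === undefined) {
    return { kind: 'field', name };
  }
  return { kind: 'file', name, fileName: params.filename };
}
```

For example, `form-data; name="UserName"` is classified as a field named `UserName`, while `form-data; name="file"; filename="photo.png"` is classified as a file.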

After getting the file’s stream, I use a helper class named “StorageService” to upload it to Amazon S3; the AWS SDK for .NET can upload a file directly from a stream. Once the upload is complete, I return a pre-signed URL for the uploaded file, valid for 3000 seconds.

InMemoryMultipartStreamProvider.cs

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Collections.Specialized;
using System.IO;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

// Buffers every MIME body part in memory instead of writing file parts to disk.
public class InMemoryMultipartStreamProvider : MultipartStreamProvider
{
    private NameValueCollection formData = new NameValueCollection();
    private List<HttpContent> fileContents = new List<HttpContent>();

    // One flag per body part, in arrival order: true = form field, false = file.
    private Collection<bool> isFormData = new Collection<bool>();

    public NameValueCollection FormData
    {
        get { return this.formData; }
    }

    public List<HttpContent> Files
    {
        get { return this.fileContents; }
    }

    // Called once per body part; the returned stream receives that part's content.
    public override Stream GetStream(HttpContent parent, HttpContentHeaders headers)
    {
        ContentDispositionHeaderValue contentDisposition = headers.ContentDisposition;
        if (contentDisposition != null)
        {
            // A part without a file name is treated as an ordinary form field.
            this.isFormData.Add(string.IsNullOrEmpty(contentDisposition.FileName));
            return new MemoryStream();
        }

        throw new InvalidOperationException(string.Format("Did not find required '{0}' header field in MIME multipart body part.", "Content-Disposition"));
    }

    // Runs after all parts have been read: sort them into form fields and files.
    public override async Task ExecutePostProcessingAsync()
    {
        for (int index = 0; index < Contents.Count; index++)
        {
            if (this.isFormData[index])
            {
                HttpContent formContent = Contents[index];
                ContentDispositionHeaderValue contentDisposition = formContent.Headers.ContentDisposition;
                string formFieldName = UnquoteToken(contentDisposition.Name) ?? string.Empty;
                string formFieldValue = await formContent.ReadAsStringAsync();
                this.FormData.Add(formFieldName, formFieldValue);
            }
            else
            {
                this.fileContents.Add(this.Contents[index]);
            }
        }
    }

    // Strips one pair of surrounding double quotes, e.g. "\"UserName\"" becomes "UserName".
    private static string UnquoteToken(string token)
    {
        if (string.IsNullOrWhiteSpace(token))
        {
            return token;
        }

        if (token.StartsWith("\"", StringComparison.Ordinal) && token.EndsWith("\"", StringComparison.Ordinal) && token.Length > 1)
        {
            return token.Substring(1, token.Length - 2);
        }

        return token;
    }
}
```
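ReadAsMultipartAsync is the piece that splits the request body into individual parts and asks the provider’s GetStream for somewhere to write each one; returning a MemoryStream is what keeps every part in memory. For readers curious about what that splitting involves, here is a deliberately simplified JavaScript sketch that does the same job over an already-buffered body. It only illustrates the wire format and is not Web API’s implementation; `splitMultipart` is a hypothetical helper, and it assumes CRLF line endings, that every part has at least one header, and that the boundary string never appears inside a part’s content.

```javascript
// Split a fully buffered multipart/form-data body into parts, in memory.
function splitMultipart(body, boundary) {
  const delimiter = Buffer.from('--' + boundary);
  const parts = [];
  let start = body.indexOf(delimiter);
  while (start !== -1) {
    start += delimiter.length;
    // "--" right after the delimiter marks the closing boundary.
    if (body.subarray(start, start + 2).toString() === '--') break;
    start += 2; // skip the CRLF that ends the delimiter line
    const end = body.indexOf(delimiter, start);
    if (end === -1) break; // malformed body: no further delimiter
    // A part is: headers, blank line, content, then CRLF before the next delimiter.
    const part = body.subarray(start, end - 2);
    const headerEnd = part.indexOf('\r\n\r\n');
    if (headerEnd === -1) throw new Error('Malformed part: no header/body separator');
    const headers = {};
    for (const line of part.subarray(0, headerEnd).toString('utf8').split('\r\n')) {
      const colon = line.indexOf(':');
      if (colon > 0) {
        headers[line.slice(0, colon).trim().toLowerCase()] = line.slice(colon + 1).trim();
      }
    }
    parts.push({ headers, content: part.subarray(headerEnd + 4) });
    start = end;
  }
  return parts;
}
```

Keeping parts in memory means each upload occupies server memory for its full size until it has been sent on, so it is worth bounding the accepted request size when large files are expected.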

StorageService.cs

```csharp
using Amazon;
using Amazon.S3;
using Amazon.S3.Model;
using Amazon.S3.Transfer;
using System;
using System.Configuration;
using System.IO;

public class StorageService
{
    private readonly IAmazonS3 client;

    public StorageService()
    {
        // Credentials are read from the <appSettings> section of Web.config.
        string accessKey = ConfigurationManager.AppSettings["AWSAccessKey"];
        string secretKey = ConfigurationManager.AppSettings["AWSSecretKey"];

        this.client = new AmazonS3Client(accessKey, secretKey, RegionEndpoint.APSoutheast1);
    }

    public bool UploadFile(string awsBucketName, string key, Stream stream)
    {
        var uploadRequest = new TransferUtilityUploadRequest
        {
            InputStream = stream,
            BucketName = awsBucketName,
            // Owner gets full control; authenticated AWS users get read access.
            CannedACL = S3CannedACL.AuthenticatedRead,
            Key = key
        };

        // Upload straight from the in-memory stream; nothing touches local disk.
        using (TransferUtility fileTransferUtility = new TransferUtility(this.client))
        {
            fileTransferUtility.Upload(uploadRequest);
        }

        return true;
    }

    public string GeneratePreSignedURL(string awsBucketName, string key, int expireInSeconds)
    {
        GetPreSignedUrlRequest request = new GetPreSignedUrlRequest
        {
            BucketName = awsBucketName,
            Key = key,
            Expires = DateTime.Now.AddSeconds(expireInSeconds)
        };

        return this.client.GetPreSignedURL(request);
    }
}
```
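GeneratePreSignedURL sets the URL’s lifetime through Expires (here, 3000 seconds from now, i.e. 50 minutes), after which S3 rejects requests made with it. If the client needs to know when the returned URL stops working, the expiry can be read back from the query string. Here is a minimal JavaScript sketch, assuming the URL uses either Signature Version 4 query parameters (`X-Amz-Date` plus `X-Amz-Expires`) or the older Signature Version 2 form (`Expires` as Unix seconds); which form the .NET SDK produces depends on the SDK version and configuration, and `presignedUrlExpiry` and `isExpired` are hypothetical names.

```javascript
// Work out when an S3 pre-signed URL expires, from its query string.
function presignedUrlExpiry(url) {
  const params = new URL(url).searchParams;
  const amzDate = params.get('X-Amz-Date');       // SigV4 signing time, e.g. 20240101T000000Z
  const amzExpires = params.get('X-Amz-Expires'); // SigV4 lifetime in seconds
  if (amzDate && amzExpires) {
    const m = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate);
    if (!m) throw new Error('Unexpected X-Amz-Date format: ' + amzDate);
    const signedAt = Date.UTC(+m[1], +m[2] - 1, +m[3], +m[4], +m[5], +m[6]);
    return new Date(signedAt + Number(amzExpires) * 1000);
  }
  const expires = params.get('Expires');          // SigV2: absolute Unix time in seconds
  if (expires) return new Date(Number(expires) * 1000);
  throw new Error('No expiry parameters found in URL');
}

// True once the current time has reached the URL's expiry.
function isExpired(url, now = new Date()) {
  return now.getTime() >= presignedUrlExpiry(url).getTime();
}
```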

Now when I run the project, I get the following. When I click the Choose File button, the file browser opens and I can select an image file.

Figure: File Upload

Figure: Uploaded File

Happy Coding.

Regards,

Jaliya
